Hi,

I am building a proof of concept (POC) with the Kong API gateway that I may present to my company in the future.

I was surprised to see high latency when calling REST APIs through Kong.

I am running Kong on Docker.

I set it up in several different ways using this documentation, trying both DB-less mode and Postgres.

Then I tried a docker-compose file I created myself.

Then I tried an existing docker-compose file found on GitHub: docker-kong/compose at master · Kong/docker-kong · GitHub

In all of these cases, I tried both DB-less mode and Postgres.

My backend is a set of three very lightweight microservices: three CRUD services written in Java with Spring Boot, backed by an in-memory HashMap instead of a database.

When I call these services directly, they respond within milliseconds.

When I go through Kong, the first call to each API can take 12 s, while subsequent calls are fast. I guess there is a cache somewhere.
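A simple way to put numbers on the gap between the first and the following calls is to time a few sequential requests against the same URL. Here is a minimal sketch using only the Python standard library; the helper name `measure_latencies` and the default count of 5 are my own choices, not anything from Kong.

```python
import sys
import time
import urllib.request


def measure_latencies(url: str, count: int = 5, timeout: float = 30.0) -> list[float]:
    """Send `count` sequential GET requests to `url`; return each duration in seconds."""
    durations = []
    for _ in range(count):
        start = time.perf_counter()
        # A 30 s timeout leaves room for a slow (e.g. 12 s) first call.
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()  # consume the body so the timing covers the full response
        durations.append(time.perf_counter() - start)
    return durations


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print(f"usage: {sys.argv[0]} URL [COUNT]")
    else:
        count = int(sys.argv[2]) if len(sys.argv) > 2 else 5
        for i, seconds in enumerate(measure_latencies(sys.argv[1], count), start=1):
            print(f"call {i}: {seconds * 1000:.1f} ms")
```

Running it once against a backend service directly and once against a route on Kong's proxy port (8000 by default) should show whether only the first call through Kong is slow.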

I also tried installing Kong directly on my computer. In that case there is no issue: it is fast.

With docker-compose, I generally split my setup into two compose files, one for Kong and one for my demo app, and configure both to use the same kong-net network.

The Kong container does not seem to be overloaded:

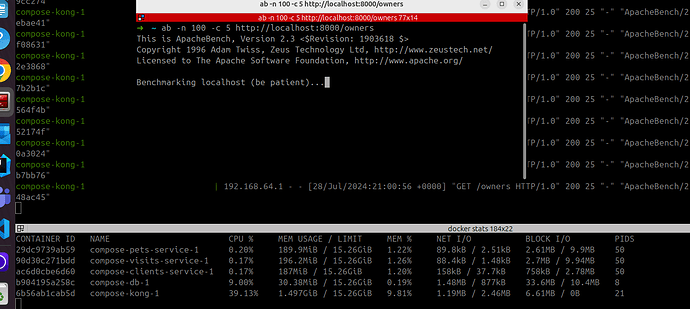

I captured these docker stats while sending many requests with the ab command.

As in the documentation, I also created a demo route to http://httpbin.org.

Calling localhost:8000/mock/anything takes more than 4 s to respond.
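For reference, this is roughly how such a demo route can be created through the Admin API, following the same service-plus-route steps as the getting-started guide but from Python instead of curl. It is a sketch: it assumes the Admin API is reachable on its default port 8001, the names mock_service and mock_route are my own, and it only applies to the Postgres setup, since in DB-less mode the Admin API is read-only and the route has to come from the declarative configuration instead.

```python
import urllib.parse
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumption: Kong Admin API on its default port


def build_mock_route_requests(admin_url: str = ADMIN_URL) -> list[urllib.request.Request]:
    """Build the Admin API calls that proxy /mock/* to http://httpbin.org."""
    # Hypothetical service name; Kong forwards matched requests to this upstream URL.
    service = urllib.request.Request(
        f"{admin_url}/services",
        data=urllib.parse.urlencode({"name": "mock_service", "url": "http://httpbin.org"}).encode(),
        method="POST",
    )
    # Hypothetical route name; with Kong's default strip_path, /mock/anything -> /anything.
    route = urllib.request.Request(
        f"{admin_url}/services/mock_service/routes",
        data=urllib.parse.urlencode({"name": "mock_route", "paths[]": "/mock"}).encode(),
        method="POST",
    )
    return [service, route]


def send_requests(requests: list[urllib.request.Request]) -> None:
    """Send each request in order and print the HTTP status Kong returns."""
    for req in requests:
        with urllib.request.urlopen(req) as resp:
            print(req.full_url, resp.status)
```

Against a running Kong, `send_requests(build_mock_route_requests())` should make http://localhost:8000/mock/anything proxy to httpbin.org/anything.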

My OS is Ubuntu 24.04.

Docker version: 26.1.3

Kong version: latest, but I also tried 3.7.1.2, 3.2.1.0, 2.7.2-alpine and 2.7.2.0-alpine.

To reproduce my case, you can use this compose file: docker-kong/compose at master · Kong/docker-kong · GitHub, and create a route to httpbin.org.

I hope this information helps track down the issue.

Don’t hesitate to ask for more information.

Thank you,

Jérémie Gudoux