I have Keycloak behind the Kong Ingress Controller.

I'm able to see the Keycloak welcome page at {url}/auth/. However, when I click Administration Console, I am redirected to {url}:8000/auth/admin/master/console/


Version of Helm and Kubectl:

- Helm v2.13.1
- kubectl:

```
Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.1", GitCommit:"b7394102d6ef778017f2ca4046abbaa23b88c290", GitTreeState:"clean", BuildDate:"2019-04-08T17:11:31Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.9", GitCommit:"16236ce91790d4c75b79f6ce96841db1c843e7d2", GitTreeState:"clean", BuildDate:"2019-03-25T06:30:48Z", GoVersion:"go1.10.8", Compiler:"gc", Platform:"linux/amd64"}
```

What you expected to happen:

When I click Administration Console, I should be redirected to {url}/auth/admin/master/console/

How to reproduce it (as minimally and precisely as possible):

- Create a Kubernetes cluster with kops in AWS.

- Install the Kong Ingress Controller by running the following command:

```shell
helm install --name kong-develop --namespace develop stable/kong \
  --set ingressController.enabled=true
```

- Create an AWS Classic Load Balancer following the instructions below:

3.1 Basic Configuration

- Load Balancer name: KongDevelopLB

- Create LB Inside: Choose your cluster VPC

3.2 Listeners

| Load Balancer Protocol | Load Balancer Port | Instance Protocol | Instance Port |
|---|---|---|---|
| TCP | 80 | TCP | kong-proxy NodePort service |
| TCP | 443 | TCP | kong-proxy TLS NodePort service |
| TCP | 8444 | TCP | kong-admin NodePort service |

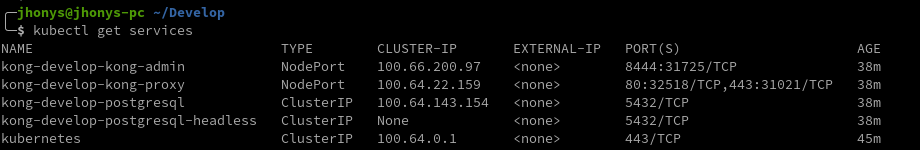

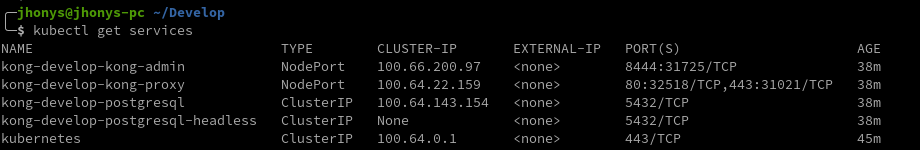

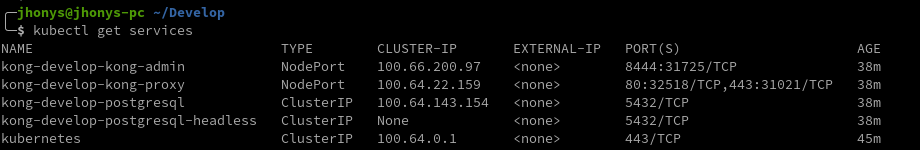

To help you find the correct ports, refer to the example images above.

In the example cluster shown in the image above:

- Load Balancer port 80 should point to instance port 32518;

- Load Balancer port 443 should point to instance port 31021;

- Load Balancer port 8444 should point to instance port 31725.
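The NodePorts can also be read from `kubectl get svc -n develop` instead of the console. The sketch below is a hypothetical helper, `nodeport_for`, that assumes the default `kubectl get svc` table layout (PORT(S) as the fifth column, entries like `80:32518/TCP`). The Service names depend on how the chart names its resources, so take them from the NAME column in your own cluster.

```shell
#!/bin/sh
# Hypothetical helper: print the NodePort that SERVICE_PORT of SERVICE_NAME is
# mapped to, reading the default `kubectl get svc` table on stdin.
# Assumes PORT(S) is the 5th column, e.g. "80:32518/TCP,443:31021/TCP".
# Usage: kubectl get svc -n develop | nodeport_for SERVICE_NAME SERVICE_PORT
nodeport_for() {
  awk -v svc="$1" -v port="$2" '
    $1 == svc {
      n = split($5, mappings, ",")
      for (i = 1; i <= n; i++) {
        # "80:32518/TCP" -> parts[1]=80 (service port), parts[2]=32518 (NodePort)
        split(mappings[i], parts, "[:/]")
        if (parts[1] == port) print parts[2]
      }
    }'
}
```

For example, if the admin Service were named `kong-develop-kong-admin` (an assumed name), `kubectl get svc -n develop | nodeport_for kong-develop-kong-admin 8444` would print the kong-admin NodePort, which is 31725 in the example cluster above.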

3.3 Select Subnets

- Select Subnets: Select the cluster available subnets

3.4 Security Group

- Assign a security group: Create a new security group

- Security group name: KongDevelopLB-SecurityGroup

| Type | Protocol | Port Range | Source |
|---|---|---|---|
| Custom TCP Rule | TCP | 80 | Anywhere |
| Custom TCP Rule | TCP | 443 | Anywhere |
| Custom TCP Rule | TCP | 8444 | Anywhere |
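The same security group could also be created with the AWS CLI. This is a minimal sketch, assuming the CLI is configured for the cluster's account and region; the function names and the group description are hypothetical, and the `run` wrapper only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print each command instead of running it, unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: create KongDevelopLB-SecurityGroup in the cluster VPC.
# With DRY_RUN=0 the AWS CLI prints the new group id.
# Usage: create_lb_security_group VPC_ID
create_lb_security_group() {
  run aws ec2 create-security-group --group-name KongDevelopLB-SecurityGroup \
    --description "Kong develop load balancer" --vpc-id "$1" \
    --query GroupId --output text
}

# Hypothetical helper: open the three listener ports to anywhere (0.0.0.0/0).
# Usage: open_lb_ports SECURITY_GROUP_ID
open_lb_ports() {
  for port in 80 443 8444; do
    run aws ec2 authorize-security-group-ingress \
      --group-id "$1" --protocol tcp --port "$port" --cidr 0.0.0.0/0
  done
}
```

Running the two functions in dry-run mode first makes it easy to review the exact calls before re-running with `DRY_RUN=0`.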

3.5 Configure Health Check

The health check should point to the kong-admin NodePort service. In the example cluster shown in the images above, that is port 31725.
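From the command line, an equivalent TCP health check could be set with `aws elb configure-health-check`. This sketch uses the same dry-run wrapper and a hypothetical function name; the interval, timeout, and threshold values are assumed defaults, not values from this report.

```shell
#!/bin/sh
# Print each command instead of running it, unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: TCP health check against the kong-admin NodePort.
# Interval/Timeout/thresholds are assumed values; adjust as needed.
# Usage: configure_kong_health_check KONG_ADMIN_NODEPORT
configure_kong_health_check() {
  run aws elb configure-health-check --load-balancer-name KongDevelopLB \
    --health-check "Target=TCP:$1,Interval=30,Timeout=5,UnhealthyThreshold=2,HealthyThreshold=10"
}
```

For the example cluster this would be `configure_kong_health_check 31725`.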

3.6 Add EC2 Instances

Add only the node instances.
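The node instances could also be registered with `aws elb register-instances-with-load-balancer`. In this sketch the instance IDs are passed in by hand; the `describe-instances` filter in the comment assumes kops tags worker nodes with `k8s.io/role/node=1` and `KubernetesCluster=<cluster name>`, which is an assumption and not taken from this report. The function name is hypothetical.

```shell
#!/bin/sh
# Print each command instead of running it, unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: attach worker nodes (not masters) to KongDevelopLB.
# Node IDs might be listed with (assumes kops instance tags):
#   aws ec2 describe-instances \
#     --filters "Name=tag:k8s.io/role/node,Values=1" \
#               "Name=tag:KubernetesCluster,Values=kubernetes.mydomain.com" \
#     --query 'Reservations[].Instances[].InstanceId' --output text
# Usage: register_nodes INSTANCE_ID...
register_nodes() {
  run aws elb register-instances-with-load-balancer \
    --load-balancer-name KongDevelopLB --instances "$@"
}
```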

3.8 Bind Load Balancer and Cluster

Edit the inbound rules of the cluster nodes' security group, adding the following rules:

| Type | Protocol | Port Range | Source | Description |
|---|---|---|---|---|
| Custom TCP Rule | TCP | kong-admin NodePort service | Custom - the KongDevelopLB security group id | kongDevelopLB |
| Custom TCP Rule | TCP | kong-proxy NodePort service | Custom - the KongDevelopLB security group id | kongDevelopLB |
| Custom TCP Rule | TCP | kong-proxy TLS NodePort service | Custom - the KongDevelopLB security group id | kongDevelopLB |

For example, in the cluster shown in the images above:

- kong-admin NodePort service is 31725.

- kong-proxy NodePort service is 32518.

- kong-proxy TLS NodePort service is 31021.
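These inbound rules could also be added with the AWS CLI, using the load balancer's security group as the source. A sketch with hypothetical function and argument names; as far as I know, this `--source-group` shorthand does not set the rule description (kongDevelopLB), which would need the longer `--ip-permissions` form.

```shell
#!/bin/sh
# Print each command instead of running it, unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: allow traffic from the load balancer's security group
# to each given NodePort on the nodes' security group.
# Usage: allow_lb_to_nodes NODES_SG_ID LB_SG_ID NODEPORT...
allow_lb_to_nodes() {
  nodes_sg=$1
  lb_sg=$2
  shift 2
  for port in "$@"; do
    run aws ec2 authorize-security-group-ingress \
      --group-id "$nodes_sg" --protocol tcp --port "$port" --source-group "$lb_sg"
  done
}
```

For the example cluster the NodePorts would be passed as `31725 32518 31021`.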

3.9 Create an alias to the Load Balancer

In the Route 53 service, create an alias record set inside the cluster hosted zone that points to the Kong load balancer.

For example, if your cluster DNS name is kubernetes.mydomain.com, you could create an alias record set named develop.kubernetes.mydomain.com.
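With the AWS CLI, the alias record could be created through `aws route53 change-resource-record-sets`. The sketch below only builds the change batch; the function name is hypothetical, and the load balancer's DNS name and canonical hosted zone ID are assumed to come from the `DNSName` and `CanonicalHostedZoneNameID` fields of `aws elb describe-load-balancers`.

```shell
#!/bin/sh
# Hypothetical helper: print a Route 53 change batch that UPSERTs an alias
# A record pointing RECORD_NAME at the load balancer.
# Usage: alias_change_batch RECORD_NAME ELB_DNS_NAME ELB_HOSTED_ZONE_ID
alias_change_batch() {
  cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$1",
        "Type": "A",
        "AliasTarget": {
          "HostedZoneId": "$3",
          "DNSName": "$2",
          "EvaluateTargetHealth": false
        }
      }
    }
  ]
}
EOF
}
```

The output could be saved to a file (for example `alias.json`) and passed as `--change-batch file://alias.json` together with `--hosted-zone-id` set to the cluster hosted zone.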

- Install Keycloak behind the Kong Ingress Controller

4.1 Create a values.yaml file with the content below:

```yaml
init:
  image:
    pullPolicy: Always

keycloak:
  username: admin
  password: admin
  readinessProbe:
    timeoutSeconds: 60
  service:
    type: NodePort
  persistence:
    deployPostgres: true
    dbVendor: postgres
  ingress:
    enabled: true
    hosts:
      - develop.kubernetes.mydomain.com
  extraEnv: |
    - name: PROXY_ADDRESS_FORWARDING
      value: "true"
```

4.2 Install Keycloak using the values.yaml file created in step 4.1

Run the following command:

```shell
helm install --name keycloak-develop --namespace develop codecentric/keycloak --values values.yaml
```

4.3 Access Keycloak

Open a browser and go to http://develop.kubernetes.mydomain.com/; you will be redirected to http://develop.kubernetes.mydomain.com/auth/

4.4 Access the Administration Console

Click the Administration Console link and you will be redirected to https://develop.kubernetes.mydomain.com:8443/auth/admin/master/console/
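To see exactly which URL Keycloak redirects to, the `Location` header of the redirect response can be inspected with curl. A minimal sketch; `location_of` is a hypothetical helper, and requesting `/auth/admin/` is assumed to trigger the redirect to the console.

```shell
#!/bin/sh
# Hypothetical helper: print the Location header value from a raw HTTP
# response header block (e.g. `curl -sI` output) read on stdin.
# CRs are stripped first because HTTP header lines end in CRLF.
# Usage: curl -sI http://develop.kubernetes.mydomain.com/auth/admin/ | location_of
location_of() {
  tr -d '\r' | awk 'tolower($1) == "location:" { print $2 }'
}
```

Running it against both the load-balanced URL and the Minikube NodePort setup should make the difference in the redirect target (host and port) visible.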

Further information:

When I install Keycloak (with Helm) on Minikube and expose it as a NodePort service, without an ingress or load balancer, I am able to access the Administration Console page.